Davide Mulfari has developed a virtual assistant that can understand words spoken by people with speech impairments

Virtual assistants are now a fixture in smartphones, cars and the homes of millions of people around the world. Alexa from Amazon, Siri from Apple and Google Assistant help with everything from setting an oven timer to buying household products. These tools are activated as soon as they “hear” a sentence, but they often fail to “understand” words spoken by people who have difficulty speaking because of a degenerative disease or a stroke. To try to solve this problem, the engineer Davide Mulfari developed CapisciAMe, the first virtual assistant designed for people with speech difficulties. Davide himself has dysarthria (a speech disorder caused by damage to the brain or to the nerves that control the tongue and lips) and believes that new technologies can help address the needs of people with disabilities. “The goal of my work is to make sure that the virtual assistants on the market can also understand the speech of all people with dysarthria, allowing them to take advantage of all the possibilities that are currently denied them.”

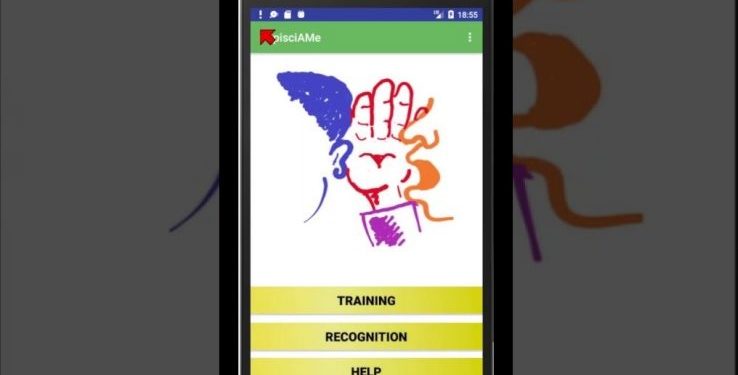

CapisciAMe: the virtual assistant that understands everyone

Davide launched CapisciAMe, a virtual assistant that “uses artificial intelligence to recognize the speech of people with dysarthria. Improving the ability of these systems to recognize the speech of people with dysarthria has become a very important goal for me. I’m trying to use the potential of artificial intelligence to train a neural network that is able, in a first phase, to recognize a limited number of words, so that the basic commands of a virtual assistant can be used.”

To do this, Davide has issued a public appeal. By downloading the app from the Play Store, anyone with dysarthria can record 13 essential words (including “high”, “low”, “right”, “left”, “close” and “open”) that will be used to train the artificial intelligence. “These vocal contributions”, explains the creator of the project, “are recorded on the mobile phone and transmitted to my system over the network. With them I will train the neural network running on my computers. Then the people who have collaborated will be able to use the second part of the same CapisciAMe app, where they will repeat the same words they recorded for the training. The system will automatically check whether it can recognize them effectively and measure the level of accuracy achieved.”
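The verification step described here, in which a user repeats the training words and the system measures how many it recognizes, could be scored roughly as in the sketch below. This is only an illustration, not CapisciAMe's actual code: the function name `recognition_accuracy` and the format of the trial data are assumptions made for the example.

```python
from collections import defaultdict


def recognition_accuracy(trials):
    """Score recognition attempts.

    `trials` is an iterable of (expected_word, recognized_word) pairs,
    a hypothetical format chosen for this example.
    Returns (overall_accuracy, per_word_accuracy).
    """
    totals = defaultdict(int)   # attempts per expected word
    correct = defaultdict(int)  # correct recognitions per expected word

    for expected, recognized in trials:
        totals[expected] += 1
        if recognized == expected:
            correct[expected] += 1

    # Fraction of correct recognitions for each word that was attempted.
    per_word = {word: correct[word] / totals[word] for word in totals}

    # Fraction of correct recognitions over all attempts (0.0 if none).
    attempts = sum(totals.values())
    overall = sum(correct.values()) / attempts if attempts else 0.0
    return overall, per_word
```

Reporting accuracy per word as well as overall would show which of the recorded words the network still confuses for a given speaker.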