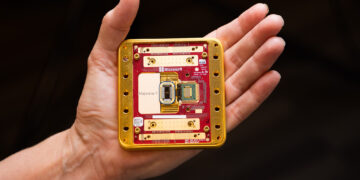

The new model examined tens of thousands of cough recordings from Covid-19 patients and identified 98.5% of new asymptomatic cases

The pandemic caused by the SARS-CoV-2 coronavirus is resurging with the arrival of winter. International efforts to contain the new wave are hampered by the difficulty of tracing new infections and detecting asymptomatic cases. The molecular (PCR) swab is the most accurate diagnostic tool, but it is slow and costly to process. Other procedures, such as rapid antigen swabs, rapid tests and serological tests, are less precise, with estimated accuracy of up to 70%. Artificial intelligence could offer new help.

A research group at the Massachusetts Institute of Technology (MIT) has developed a new artificial intelligence model that can recognize an asymptomatic Covid-19 patient in 98.5% of cases. The analysis is performed on an audio recording of a person coughing. The researchers built the model from more than 200,000 audio recordings, including 70,000 from Covid-19 patients. The research has been published in the IEEE Open Journal of Engineering in Medicine and Biology.

Before the pandemic, the team had been using cough recordings to look for signs of Alzheimer's disease. Brian Subirana, a co-author of the study, explains: “The sounds of talking and coughing are both influenced by the vocal cords and surrounding organs. This means that when you talk, part of your talking is like coughing, and vice versa. It also means that things we easily derive from fluent speech, AI can pick up simply from coughs, including things like the person’s gender, mother tongue, or even emotional state. There’s in fact sentiment embedded in how you cough. So we thought, why don’t we try these Alzheimer’s biomarkers for Covid.” The result was surprising. The group is now working on a smartphone app, with the goal of providing a means of mass diagnosis within everyone's reach.
