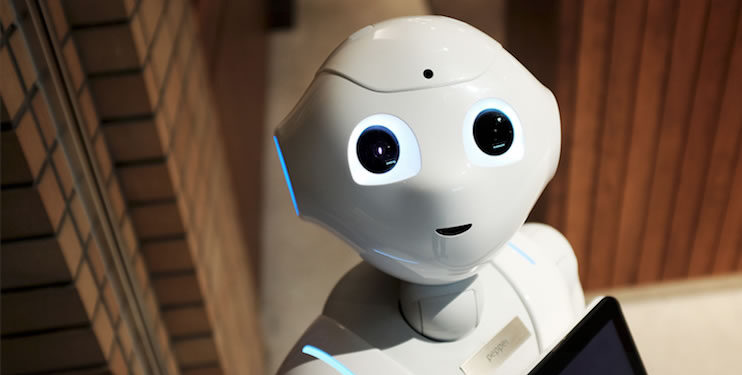

For the first time, a machine has been able to predict the actions of another simply by observing its behavior. Is this proof that even robots can feel empathy?

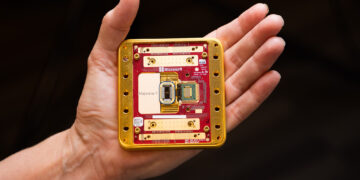

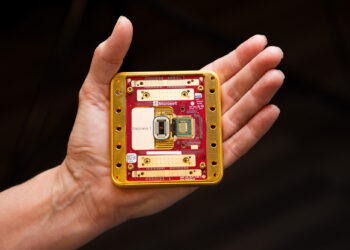

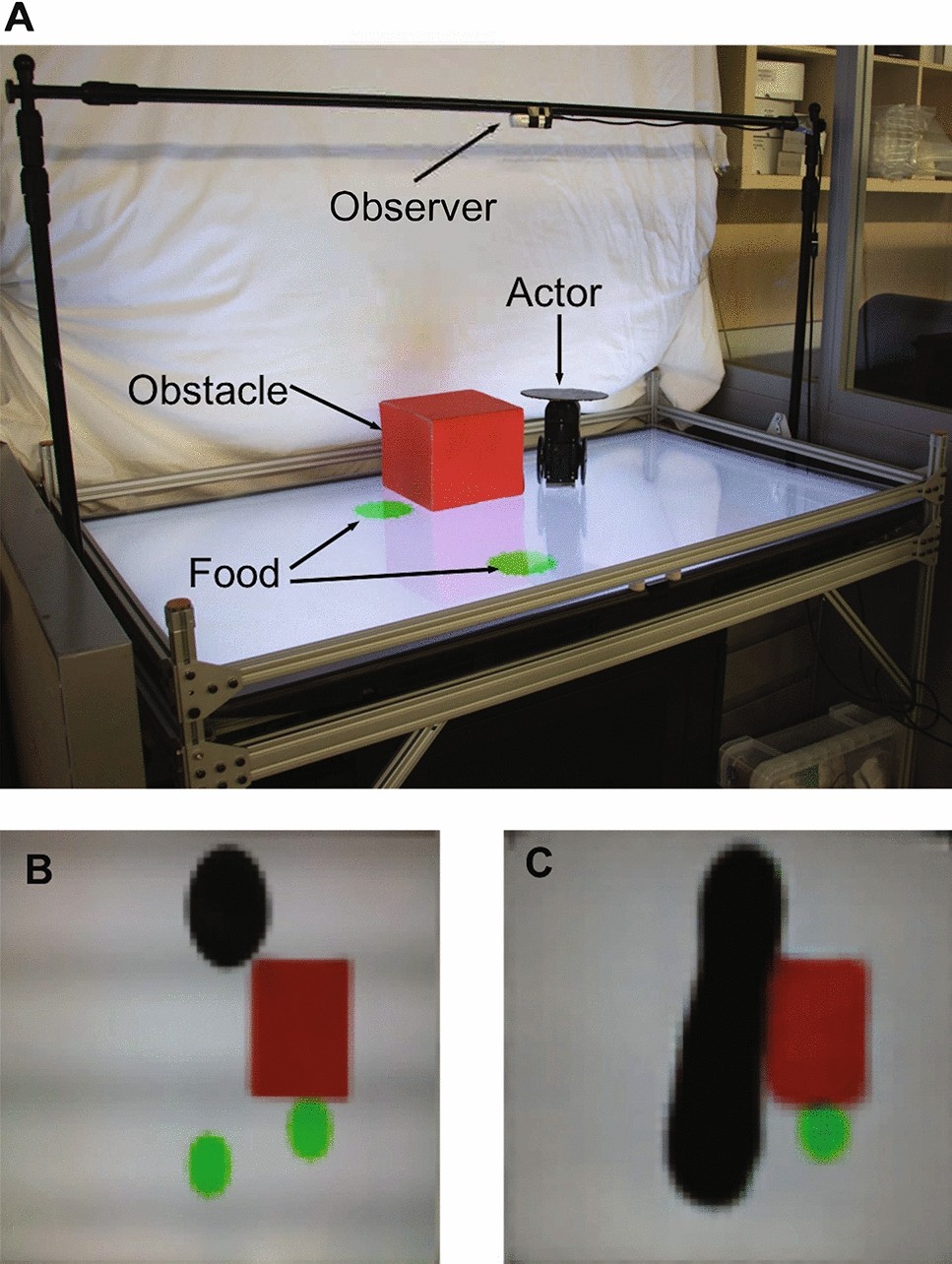

Can robots feel empathy? This question, halfway between science fiction, robotics and artificial intelligence, has tormented scientists and dreamers around the world for decades. Today, however, a first (partial) answer may have arrived. A team of engineers from Columbia University in New York has published an article in the journal Scientific Reports showing, for the first time, that even robots can “feel empathy”, that is, predict the intentions of another subject simply by observing its actions.

The team, led by engineer Hod Lipson, conducted a very simple experiment with a pair of robots equipped with artificial intelligence. One of the two robots (the observer) is positioned above a table and can “watch” it. On the table is the second robot (the actor), which is free to move to reach some green spots that stand for food. The table also holds some obstacles that hide the food from the actor robot’s view. The analysis showed that in 98% of cases the observer robot can tell when its companion is unable to see and reach the food. Is that all? Yes, and that’s really a lot. In the study abstract, the authors hypothesize that “such modeling of visual behavior is an essential cognitive capability that will allow machines to understand and coordinate with surrounding agents, avoiding the infamous symbol grounding problem. Through a false belief test, we suggest that this approach may be a precursor to Theory of Mind, one of the hallmarks of primate social cognition.”

Can robots feel empathy?

Cristina Becchio, a scientist at the Italian Institute of Technology in Genoa, tried to explain the scope of the Columbia University engineers’ discovery. “Simply by observing the behavior of the other,” said the scientist, “a very simple robot with artificial intelligence was able to predict that behavior. It’s what happens during many human interactions, and among the machines it occurred without the need to provide any information. All that was needed was a camera mounted on the first robot and pointed at the second one.”

The “discovery” itself is very simple but potentially revolutionary. Predictive behaviors (I see what you do and act by predicting what you’ll do a second later) are the basis of all social relationships. The experiment by the Columbia University engineers could therefore open the door to a new era of artificial intelligence, an era in which robots will be able to interact with humans or other robots without the need for instructions, thanks only to their ability to “observe” and “predict” behavior.

Today, Becchio explains, robots equipped with artificial intelligence “carry out much more complex tasks than those described in the Columbia University experiment. But they are characterized by reacting to the behavior of others, not predicting it. In this way, interactions lose fluidity. If you have to wait for the machine’s reaction every time, everything takes longer. All social interactions, by contrast, are predictive in nature.” And it is precisely here that the revolution takes place. “Today’s experiment shows that observing can be sufficient to predict the behavior of others. Once I know how to represent the mental state of others, I can act empathically or manipulate it in a Machiavellian way. Today, an artificial intelligence can take the image of a person standing in the street with their foot up and make them complete the step. A robot capable of predicting behavior will also know how to reconstruct its future path.”
